StoryFile Team

The importance of responsible artificial intelligence is becoming more apparent every day as AI becomes more integrated into society at large. The world of AI is moving at an incredible pace. The past year has seen new developments in AI technology that have made it possible to apply machine learning to a myriad of tasks, from helping doctors diagnose diseases more quickly to making it easier for businesses to collect and interpret data. In many ways, this is a good thing, but it also presents some serious challenges. As we continue to discover more about AI’s capabilities, StoryFile continues to learn how best to manage the risks ahead, ensuring we remain a company that is authentic, innovative, and trustworthy.

“Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So, we cannot know if we will be infinitely helped by AI, or ignored by it or destroyed by it.”

Stephen Hawking

There have been many examples, past and recent, of irresponsible AI, including biased algorithms that harm marginalized groups of people. Consider the case of Tay and Zo, two Microsoft chatbots released to the public on Twitter. AI can be a powerful tool for automating routine tasks and making decisions that are more accurate than those made by humans alone.

But it’s important to note that AI is only as good as the data it’s trained on and the algorithms used to interpret that data. When Microsoft’s Tay debuted in March 2016, it was engaging, witty, and funny. Within 16 hours of its release, Tay had turned racist, sexist, and incredibly hateful. Users made their outrage known, and Microsoft suspended Tay. Some blame this outcome on the toxicity of social media; others blame poor design. Either way, Microsoft unveiled Zo months later, and it remained active and available for interaction through 2019. Zo was essentially an upgraded version of Tay, the bot gone bad. At worst, if a user belittled, berated, or egged Zo on, she would end the conversation with an “i’m better than u bye.” What lessons were learned from this experiment, and will those lessons actually have an impact in the field? That remains to be seen.

Even the generative AI used in the development of storyfiles might provide suggestions that are inaccurate.

So, how does StoryFile avoid becoming a ‘Tay’?

Humans.

Storyfiles are developed by humans, and any generative AI content is reviewed and then approved, modified, or rejected by humans. The human element allows us to stay true to our values and keeps us committed to being a trustworthy source for our users, clients, and storytellers.

StoryFile’s values center on authenticity, innovation, and trustworthiness. Avoiding things like biased data sources helps us earn the trust of our clients and users.

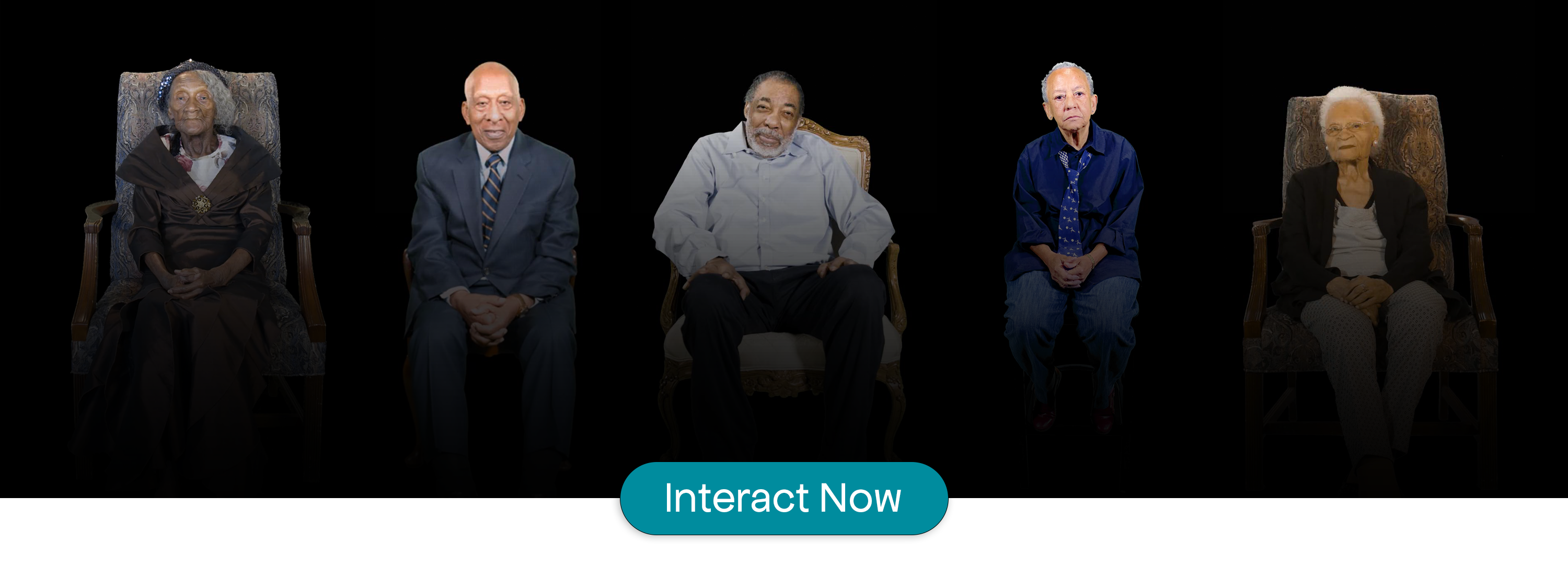

StoryFile believes not only that stories must remain accurate and factual, but also that everyone should have the opportunity to tell their own personal stories in their own words. An example of this is our Black Voices Collection, made available to the public on our website in February 2022. These storyfiles are educational, factual, and heartfelt. They are stories from those who lived them, a diverse group of voices: artists, allies, and civil rights activists.

StoryFile is proud to provide the ability to document and share these personal wisdoms, truths, human insights, and historical stories, all with the intention of informing, educating, and helping to guide future generations. Visit our gallery of storyfiles to interact with many examples of why we built our Conversa platforms and conversational video AI technology so thoughtfully and responsibly. As AI technology continues to develop, StoryFile continues to ensure our AI is driven only by reliable and honest information.